The Most Cost-Effective GPU Cloud for Global AI Scaling.

Deploy high-performance GPU pods with unmatched price-to-performance. Leverage a globally distributed network designed for seamless training and production-grade inference.

Cut Your GPU Expenses by 50%.

We bridge the gap between expensive hyperscalers and unreliable providers. Our infrastructure is optimized to provide maximum stability at half the cost.

NVIDIA RTX 4090: Vision & Inference

NVIDIA A100 (80GB): LLM Fine-tuning

NVIDIA H100 (80GB): Massive-scale Training

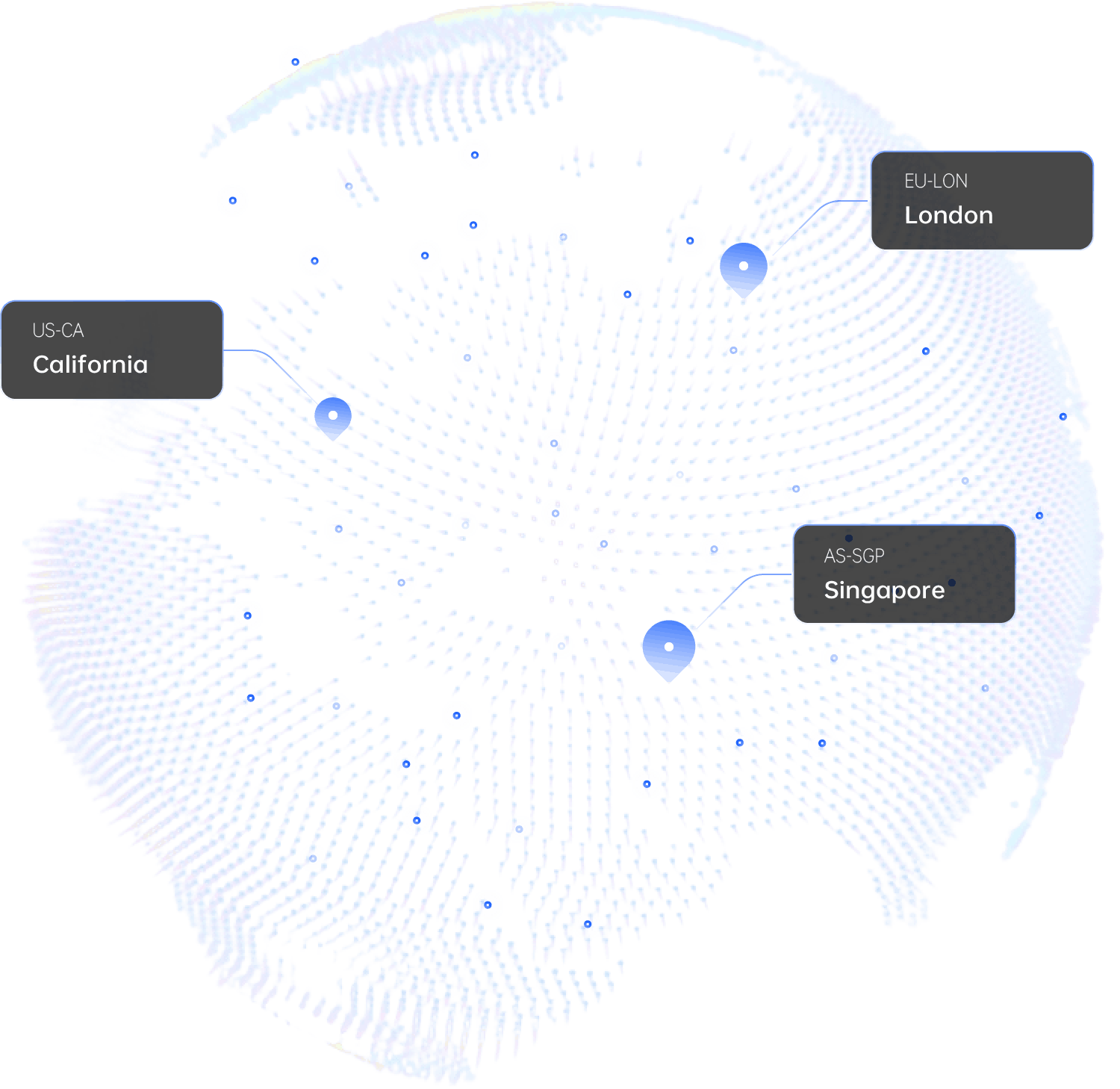

Distributed, Low-Latency.

Our infrastructure spans 5 key global regions, interconnected via private dark fiber to keep response times low for users anywhere in the world.

GPU Pods: Developer-Centric Infrastructure

Performance-tuned for the modern AI stack

True Pay-As-You-Go

Granular hourly billing with no long-term commitments or hidden overhead. Cost scales exactly with your execution time.

Shared Network Volumes

Decouple your data from compute. Mount a single Network Volume to multiple GPU Pods simultaneously to share datasets and models across your fleet.

Zero-Cost System Storage

Every instance includes a complimentary high-performance OS disk to accelerate your initial deployment. No hidden storage line items.

Image Pre-warming

Automatic pre-warming for custom Docker Hub images ensures near-instantaneous boot times even for multi-gigabyte environments.

Pre-Optimized AI Environments

From Zero to Deployment in One Click. Skip the environment setup.

LLM & Inference: vLLM-DeepSeek-R1-Distill, CUDA 12.1

Deep Learning: PyTorch 1.8.1 - 2.5.0, TensorFlow

Creative AI: ComfyUI Standard, Generative

Voice & Audio: RVC (AI Cover Singing), VibeVoice-7B

Turnkey Tooling: Immediate access via secure SSH or integrated JupyterLab environments, performance-tuned for the modern AI stack.

Serverless: Zero-Management.

Predictive Scaling

AI-driven workload prediction provisions capacity before a traffic spike hits, keeping performance consistent without manual tuning.

Edge-Ready Routing

Traffic is routed to the nearest available GPU node, slashing TTFT (Time to First Token) for real-time generative AI applications.

Autoscale in Seconds

Respond to demand with GPU workers that scale from zero to thousands in seconds. Never pay for idle capacity again.

Zero Cold Starts with Active Workers

Always-on GPUs for uninterrupted execution. Achieve millisecond latency for your most demanding AI inference tasks.